How to Disable Data Collection in ChatGPT

When you sign up for ChatGPT, data collection is enabled by default. That means your prompts and conversations may be used to train OpenAI's models. While OpenAI says it takes steps to protect your privacy, many people aren't comfortable with their personal or company data being included in AI training.

Step-by-Step: Turn It Off

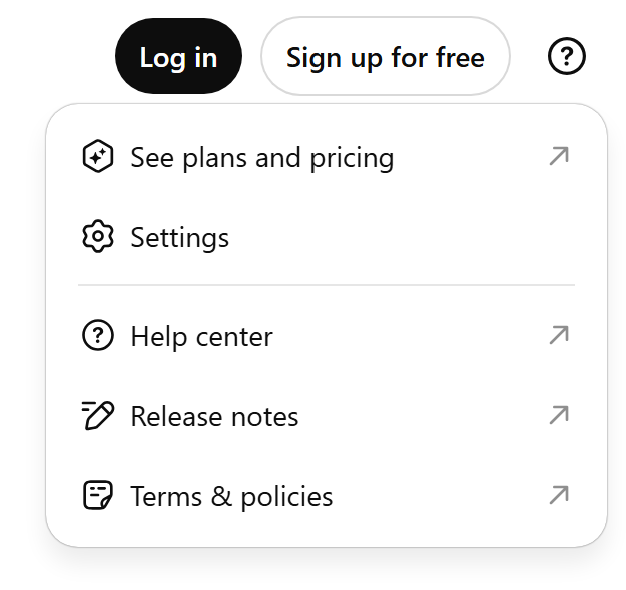

- Visit chatgpt.com and sign in.

- Click your profile icon in the top-right corner.

- Click Settings.

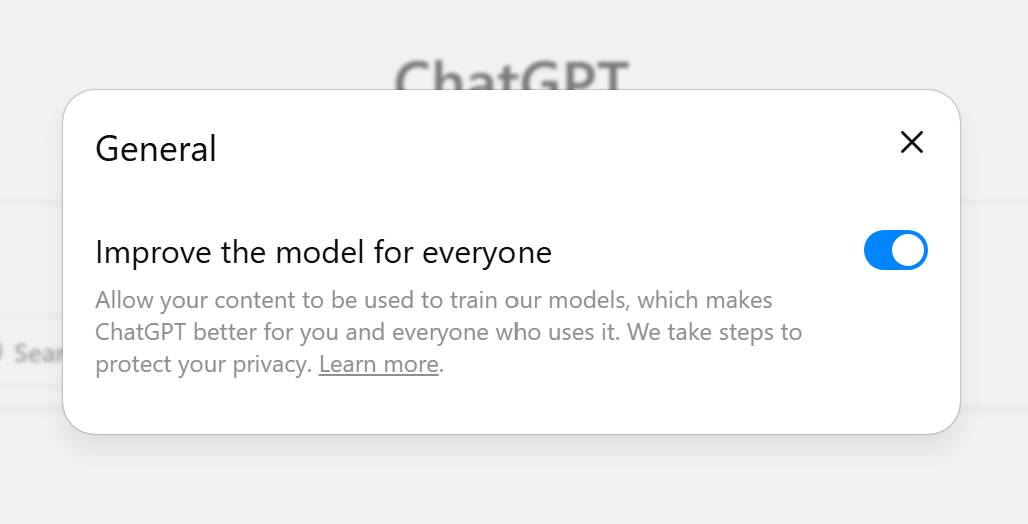

- Under Data Controls, toggle off Improve the model for everyone.

The toggle's description reads: “Allow your content to be used to train our models, which makes ChatGPT better for you and everyone who uses it. We take steps to protect your privacy.”

Why This Matters

Even if you’re just experimenting, your chats may include sensitive information such as customer details, internal code names, or financial data. Once you submit a prompt, you no longer control how it is stored or processed. Turning off training use reduces your exposure and aligns with security best practices.

Legal Note from OpenAI

OpenAI’s legal section clarifies an important exception:

“Even if you’ve opted out of training, you can still choose to provide feedback to us about your interactions with our products (for instance, by selecting thumbs up or thumbs down on a model response). If you choose to provide feedback, the entire conversation associated with that feedback may be used to train our models.”

Translation: if you use the thumbs up/down feedback, the whole conversation tied to that feedback can still be used for training, even if you opted out. Keep that in mind before submitting feedback on a sensitive thread.

Take Control of Your Data

- Disable data collection in ChatGPT as shown above.

- Avoid pasting sensitive information into AI tools when possible.

- Use privacy tools that run locally on your device and don't log your prompts to a cloud service.
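If you do need to paste text into an AI tool, a quick local pass can catch obvious leaks before anything leaves your machine. The following is a minimal sketch, assuming you only want to catch email addresses plus a list of internal code names you supply yourself. `redact_prompt` is a hypothetical helper, not part of ChatGPT or any product, and the email pattern is deliberately simple rather than complete. It runs entirely in local Python with no network calls.

```python
import re

# Hypothetical helper for illustration only.
# Simple email pattern: catches common addresses, not every valid one.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_prompt(text: str, code_names: list[str]) -> tuple[str, list[str]]:
    """Redact emails and user-supplied code names; return (redacted_text, findings)."""
    findings: list[str] = []

    def _replace_email(match: re.Match) -> str:
        # Record what was found, then swap it for a placeholder.
        findings.append(f"email: {match.group(0)}")
        return "[EMAIL]"

    redacted = EMAIL_RE.sub(_replace_email, text)

    # Replace each internal code name as a whole phrase, ignoring case.
    for name in code_names:
        pattern = re.compile(r"\b" + re.escape(name) + r"\b", re.IGNORECASE)
        if pattern.search(redacted):
            findings.append(f"code name: {name}")
            redacted = pattern.sub("[REDACTED]", redacted)

    return redacted, findings


prompt = "Ask jane.doe@example.com about Project Falcon pricing."
clean, issues = redact_prompt(prompt, ["Project Falcon"])
print(clean)   # Ask [EMAIL] about [REDACTED] pricing.
print(issues)  # ['email: jane.doe@example.com', 'code name: Project Falcon']
```

A pattern list like this only catches what you tell it to look for, so treat it as a safety net rather than a reason to paste sensitive material.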

Try KanActive AI Lite

KanActive AI Lite helps reduce prompt-level risk by detecting payment card (PCI) data, such as credit card numbers, in real time inside ChatGPT and alerting you before you submit a prompt. It's local-first, with no signup and no server-side logging.
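How KanActive AI Lite detects card numbers internally isn't described here. As a generic illustration only, and not KanActive's implementation, a common local approach is to match 13 to 19 digit sequences and then keep only those that pass the Luhn (mod 10) checksum that payment card numbers use. `find_card_numbers` and `luhn_valid` are hypothetical names for this sketch, and the digit pattern is a heuristic that can miss numbers embedded in longer digit runs.

```python
import re

# Heuristic candidate pattern: 13-19 digits, optionally separated by single spaces or hyphens.
CANDIDATE_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn (mod 10) checksum."""
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return substrings that look like card numbers and pass the Luhn check."""
    hits: list[str] = []
    for match in CANDIDATE_RE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group(0))
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(match.group(0))
    return hits


text = "My card is 4111 1111 1111 1111, order id 4111 1111 1111 1112."
print(find_card_numbers(text))  # ['4111 1111 1111 1111']
```

The Luhn step matters because it filters out most random digit strings (order IDs, phone numbers) that happen to have the right length, which keeps false alarms down.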